Hi there - I’m desperate

So my setup:

Mother system: Ubuntu 20 desktop + KVM

VM Subspace(all :

4-8 CPU 2.4gz

8gb mem

Seagate HDD 1tb 7200rpm (SATA 3 connect)

750gb plot size

My problem:

ZERO TSSC after week with experiments with my Subpsace node ![]()

After fullsync my node start to produce errors in node-log

“Creating inherent data providers took more time than we had left for the slot.”

The same time my farmer just plotting and no any errors.

AND my LA on server starts growing (11+LA with 6CPUs cores\threads… without high load on CPU) - so my guess that standart HDD it’s not enough speed for Subspace chain… Any ideas how it’s possible to fix it?

I’m already tried:

- another HDD with 5400 RPM (WD)

- raid0 with both HDDs

- sync without --validator and start farming only after fullsync

- allocate 32gb RAM and 16cpu cores

- check my HDDs for badblocks and other benchmarks

P.S. i’m added screenshots with logs lower

simple htop command with LA 10-12

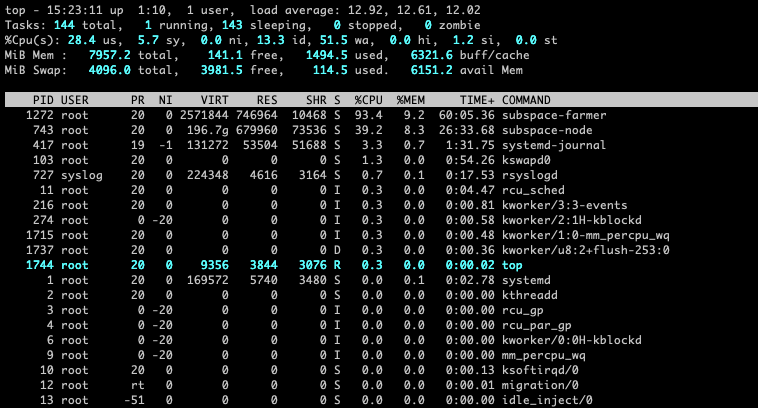

The same time top-command show me high LA

IOtop

NodeLogs

2022-06-16 15:26:16 [PrimaryChain] 💤 Idle (80 peers), best: #231960 (0x1bbc…6fb6), finalized #0 (0x9ee8…ccf0), ⬇ 352.0kiB/s ⬆ 117.4kiB/s

2022-06-16 15:26:18 [PrimaryChain] ✨ Imported #231961 (0x96cb…89cd)

2022-06-16 15:26:18 [PrimaryChain] ♻️ Reorg on #231961,0x96cb…89cd to #231961,0x01b7…53c4, common ancestor #231960,0x1bbc…6fb6

2022-06-16 15:26:18 [PrimaryChain] ✨ Imported #231961 (0x01b7…53c4)

2022-06-16 15:26:21 [PrimaryChain] 💤 Idle (80 peers), best: #231961 (0x01b7…53c4), finalized #0 (0x9ee8…ccf0), ⬇ 173.2kiB/s ⬆ 86.2kiB/s

2022-06-16 15:26:24 [PrimaryChain] ✨ Imported #231962 (0x1bdc…624f)

2022-06-16 15:26:26 [PrimaryChain] 💤 Idle (80 peers), best: #231962 (0x1bdc…624f), finalized #0 (0x9ee8…ccf0), ⬇ 253.7kiB/s ⬆ 75.8kiB/s

2022-06-16 15:26:27 [PrimaryChain] 💔 Verification failed for block 0x48db074527a135a05da14430d331f61a41d41a4d96658c0db32f9cd591c0f9cf received from peer: 12D3KooWBQkezfW7xESWMc3LAu3UEtCkppW8mMKXWDx6YF8CXuQw, "UnknownBlock: State already discarded for BlockId::Hash(0x8084702a7df7b3680515ec96cf844274cc5cc770e5a7dcbf50f07a8339892751)"

2022-06-16 15:26:31 [PrimaryChain] 💤 Idle (80 peers), best: #231962 (0x1bdc…624f), finalized #0 (0x9ee8…ccf0), ⬇ 284.5kiB/s ⬆ 71.1kiB/s

2022-06-16 15:26:34 [PrimaryChain] ✨ Imported #231963 (0x6a25…f2f9)

2022-06-16 15:26:36 [PrimaryChain] ✨ Imported #231964 (0xb43e…ccff)

2022-06-16 15:26:36 [PrimaryChain] 💤 Idle (47 peers), best: #231964 (0xb43e…ccff), finalized #0 (0x9ee8…ccf0), ⬇ 230.6kiB/s ⬆ 77.0kiB/s

2022-06-16 15:26:38 [PrimaryChain] ✨ Imported #231965 (0x9483…7364)

2022-06-16 15:26:39 [PrimaryChain] ✨ Imported #231966 (0x00e8…5523)

2022-06-16 15:26:42 [PrimaryChain] 💤 Idle (80 peers), best: #231966 (0x00e8…5523), finalized #0 (0x9ee8…ccf0), ⬇ 454.8kiB/s ⬆ 90.0kiB/s

2022-06-16 15:26:43 [PrimaryChain] ✨ Imported #231967 (0x49fc…3097)

2022-06-16 15:26:47 [PrimaryChain] 💤 Idle (80 peers), best: #231967 (0x49fc…3097), finalized #0 (0x9ee8…ccf0), ⬇ 156.9kiB/s ⬆ 72.2kiB/s

2022-06-16 15:26:47 [PrimaryChain] ✨ Imported #231968 (0x0746…4a29)

2022-06-16 15:26:50 [PrimaryChain] 💔 Verification failed for block 0x48db074527a135a05da14430d331f61a41d41a4d96658c0db32f9cd591c0f9cf received from peer: 12D3KooWBQkezfW7xESWMc3LAu3UEtCkppW8mMKXWDx6YF8CXuQw, "UnknownBlock: State already discarded for BlockId::Hash(0x8084702a7df7b3680515ec96cf844274cc5cc770e5a7dcbf50f07a8339892751)"

2022-06-16 15:26:52 [PrimaryChain] 💤 Idle (80 peers), best: #231968 (0x0746…4a29), finalized #0 (0x9ee8…ccf0), ⬇ 297.9kiB/s ⬆ 93.3kiB/s

2022-06-16 15:26:52 [PrimaryChain] ✨ Imported #231969 (0x8d3d…7667)

2022-06-16 15:26:57 [PrimaryChain] 💤 Idle (80 peers), best: #231969 (0x8d3d…7667), finalized #0 (0x9ee8…ccf0), ⬇ 193.1kiB/s ⬆ 88.2kiB/s

2022-06-16 15:26:58 [PrimaryChain] ✨ Imported #231970 (0x233c…5f72)

2022-06-16 15:27:02 [PrimaryChain] ✨ Imported #231971 (0xe2eb…ea31)

2022-06-16 15:27:02 [PrimaryChain] 💔 Verification failed for block 0x48db074527a135a05da14430d331f61a41d41a4d96658c0db32f9cd591c0f9cf received from peer: 12D3KooWBQkezfW7xESWMc3LAu3UEtCkppW8mMKXWDx6YF8CXuQw, "UnknownBlock: State already discarded for BlockId::Hash(0x8084702a7df7b3680515ec96cf844274cc5cc770e5a7dcbf50f07a8339892751)"

2022-06-16 15:27:02 [PrimaryChain] 💤 Idle (30 peers), best: #231971 (0xe2eb…ea31), finalized #0 (0x9ee8…ccf0), ⬇ 379.0kiB/s ⬆ 81.3kiB/s

2022-06-16 15:27:08 [PrimaryChain] 💤 Idle (80 peers), best: #231971 (0xe2eb…ea31), finalized #0 (0x9ee8…ccf0), ⬇ 131.6kiB/s ⬆ 96.7kiB/s

2022-06-16 15:27:13 [PrimaryChain] 💤 Idle (80 peers), best: #231971 (0xe2eb…ea31), finalized #0 (0x9ee8…ccf0), ⬇ 70.4kiB/s ⬆ 61.8kiB/s

2022-06-16 15:27:15 [PrimaryChain] ✨ Imported #231972 (0xee4c…2ba4)

2022-06-16 15:27:16 [PrimaryChain] ✨ Imported #231973 (0x5bce…65c6)

2022-06-16 15:27:18 [PrimaryChain] 💤 Idle (80 peers), best: #231973 (0x5bce…65c6), finalized #0 (0x9ee8…ccf0), ⬇ 284.0kiB/s ⬆ 78.4kiB/s

2022-06-16 15:27:19 [PrimaryChain] 💔 Verification failed for block 0x48db074527a135a05da14430d331f61a41d41a4d96658c0db32f9cd591c0f9cf received from peer: 12D3KooWBQkezfW7xESWMc3LAu3UEtCkppW8mMKXWDx6YF8CXuQw, "UnknownBlock: State already discarded for BlockId::Hash(0x8084702a7df7b3680515ec96cf844274cc5cc770e5a7dcbf50f07a8339892751)"

2022-06-16 15:27:21 [PrimaryChain] ✨ Imported #231974 (0xa359…d65f)

2022-06-16 15:27:23 [PrimaryChain] 💤 Idle (80 peers), best: #231974 (0xa359…d65f), finalized #0 (0x9ee8…ccf0), ⬇ 369.6kiB/s ⬆ 87.7kiB/s

2022-06-16 15:27:27 [PrimaryChain] ✨ Imported #231975 (0x0c1e…b05d)

2022-06-16 15:27:28 [PrimaryChain] 💤 Idle (38 peers), best: #231975 (0x0c1e…b05d), finalized #0 (0x9ee8…ccf0), ⬇ 203.4kiB/s ⬆ 85.4kiB/s

2022-06-16 15:27:30 [PrimaryChain] ✨ Imported #231976 (0x640d…f014)

2022-06-16 15:27:33 [PrimaryChain] 💤 Idle (80 peers), best: #231976 (0x640d…f014), finalized #0 (0x9ee8…ccf0), ⬇ 236.2kiB/s ⬆ 104.8kiB/s

2022-06-16 15:27:38 [PrimaryChain] 💤 Idle (80 peers), best: #231976 (0x640d…f014), finalized #0 (0x9ee8…ccf0), ⬇ 96.6kiB/s ⬆ 83.9kiB/s

FarmerLogs

2022-06-16T15:20:05.759836Z INFO subspace_farmer::archiving: Plotted segment segment_index=111384

2022-06-16T15:20:45.193142Z INFO subspace_farmer::archiving: Plotted segment segment_index=111385

2022-06-16T15:21:27.708898Z INFO subspace_farmer::archiving: Plotted segment segment_index=111386

2022-06-16T15:22:18.731336Z INFO subspace_farmer::archiving: Plotted segment segment_index=111387

2022-06-16T15:23:09.229447Z INFO subspace_farmer::archiving: Plotted segment segment_index=111388

2022-06-16T15:24:32.094677Z INFO subspace_farmer::archiving: Plotted segment segment_index=111389

2022-06-16T15:25:44.406583Z INFO subspace_farmer::archiving: Plotted segment segment_index=111390

2022-06-16T15:26:38.765808Z INFO subspace_farmer::archiving: Plotted segment segment_index=111391

2022-06-16T15:27:30.667627Z INFO subspace_farmer::archiving: Plotted segment segment_index=111392

2022-06-16T15:28:15.303544Z INFO subspace_farmer::archiving: Plotted segment segment_index=111393

2022-06-16T15:28:49.796022Z INFO subspace_farmer::archiving: Plotted segment segment_index=111394